Integrity. It’s a quality every man worth his salt aspires to. It encompasses many of the best and most admirable traits in a man: honesty, uprightness, trustworthiness, fairness, loyalty, and the courage to keep one’s word and one’s promises, regardless of the consequences. The word integrity derives from the Latin for “wholeness” and it denotes a man who has successfully integrated all good virtues – who not only talks the talk, but walks the walk.

It’s not too difficult to discuss this quality in general terms and offer “just do it” advice on maintaining one’s integrity. But a quick glance at the never-ending news headlines trumpeting the latest scandal and tale of corruption shows that this isn’t always the most effective approach. While the foundation of integrity is having a firm moral code of right and wrong, it can also be enormously helpful, even crucial, to understand the psychological and environmental factors that can tempt us to stray from that code. What’s at the root of our decision to sometimes compromise our principles? What kinds of things lead us to be less honest and what kinds of things help us to be more upright? What are some practical ways we can check our temptations to be immoral or unethical? How can we strengthen not only our own integrity, but the integrity of society as well?

In this four-part series on integrity, we will draw on the research of Dan Ariely, a professor of psychology and behavioral economics, and of other researchers to answer these vital questions.

Why Do We Compromise Our Integrity?

Every day we are faced with little decisions that reflect on our integrity. What’s okay to call a business expense or put on the company charge card? Is it really so bad to stretch the truth a little on your resume in order to land your dream job? Is it wrong to do a little casual flirting when your girlfriend isn’t around? If you’ve missed a lot of class, can you tell your professor a family member died? Is it bad to call in sick to work (or to the social/family function you’re dreading) when you’re hungover? Is it okay to pirate movies or use an ad blocker when surfing the web?

For a long time it was thought that people made such decisions by employing a rational cost/benefit analysis. When tempted to engage in an unethical behavior, they would weigh the chances of getting caught and the resulting punishment against the possible reward, and then act accordingly.

However, experiments by Dr. Ariely and others have shown that far from being a deliberate, rational choice, dishonesty often results from psychological and environmental factors that people typically aren’t even aware of.

Ariely discovered this truth by constructing an experiment in which participants (college students) sat in a classroom-like setting and were given 20 mathematical matrices to solve. They were tasked with solving as many matrices as they could within 5 minutes and given 50 cents for each one they got correct. Once the 5 minutes were up, the participants would take their worksheets to the experimenter, who counted up the correct answers and paid out the appropriate amount of money. In this control condition, participants correctly solved an average of 4 matrices.

Ariely then introduced a condition that allowed for cheating. Once the participants were finished, they checked their own answers, shredded their worksheets at the back of the room, and self-reported how many matrices they had correctly solved to the experimenter at the front, who then paid them accordingly. Once the possibility of cheating was introduced, participants claimed to solve 6 matrices on average – two more than the control group. Ariely found that given the chance, lots of people cheated – but just by a little bit.

To test the idea that people were making a cost/benefit analysis when deciding whether or not to cheat, Ariely introduced a new condition that made it clear there was no chance of being caught: after checking their own answers and shredding their worksheets, participants retrieved their payout not from the experimenter, but by grabbing it out of a communal bowl of cash with no one watching. Yet contrary to expectations, removing the possibility of being caught did not increase the rate of cheating at all. So Ariely tried upping the amount of money the participants could earn for each correctly solved matrix; if cheating was indeed a rational choice based on financial incentive, then the rate of cheating should have risen along with the reward. But boosting the possible payout had no such effect. In fact, when the reward was at its highest ($10 for each correct answer, which a participant had only to claim he had gotten), cheating went down. Why? “It was harder for them to cheat and still feel good about their own sense of integrity,” Ariely explains. “At $10 a matrix, we’re not talking about cheating on the level of, say, taking a pencil from the office. It’s more akin to taking several boxes of pens, a stapler, and a ream of printer paper, which is much more difficult to ignore or rationalize.”

This, Ariely had discovered, went to the root of people’s true motivations for cheating. Decisions to be dishonest aren’t made solely on the basis of risk vs. reward; they’re also greatly influenced by the degree to which those decisions will affect our ability to keep seeing ourselves in a positive light. Ariely explains these two opposing drives:

“On one hand, we want to view ourselves as honest, honorable people. We want to be able to look at ourselves in the mirror and feel good about ourselves (psychologists call this ego motivation). On the other hand, we want to benefit from cheating and get as much money as possible (this is the standard financial motivation). Clearly these two motivations are in conflict. How can we secure the benefits of cheating and at the same time still view ourselves as honest, wonderful people?

This is where our amazing cognitive flexibility comes into play. Thanks to this human skill, as long as we cheat by only a little bit, we can benefit from cheating, and still view ourselves as marvelous human beings. This balancing act is the process of rationalization, and it is the basis of what we’ll call the ‘fudge factor theory.’”

The “fudge factor theory” explains how we decide where to draw the line between “okay” and “not okay,” between decisions that make us feel guilty and those we find a way to confidently justify. The more we’re able to rationalize our decisions as morally acceptable, the wider this fudge factor margin becomes. And most of us are highly adept at it: Everyone else is doing it. This just levels the playing field. They’re such a huge company that this won’t affect them at all. They don’t pay me enough anyway. He owes me this. She cheated on me once too. If I don’t, my future will be ruined.

Where you draw the line and how wide you allow your fudge factor margin to become is influenced by a variety of external and internal conditions, the most important one being this: simply taking a first, however small, dishonest step. Other conditions can increase or decrease your likelihood of taking that initial step, and we’ll discuss them in the subsequent parts of this series. But since whether or not you make that first dishonest decision very often constitutes the crux of the matter, let us begin there.

The First Cut Is the Deepest: Sliding Down the Pyramid of Choice

Have you ever watched as the gross corruption of a once admired public figure was revealed and wondered how he ever fell so far from grace?

Dimes to donuts he didn’t wake up one day and decide to pocket a million dollars that wasn’t his. Instead, his journey to the dark side almost assuredly began with a small decision that seemed fairly inconsequential at the time, like fudging a number or two on one of his accounts. But once he had a foot in the door of dishonesty, his crimes slowly grew bigger and bigger.

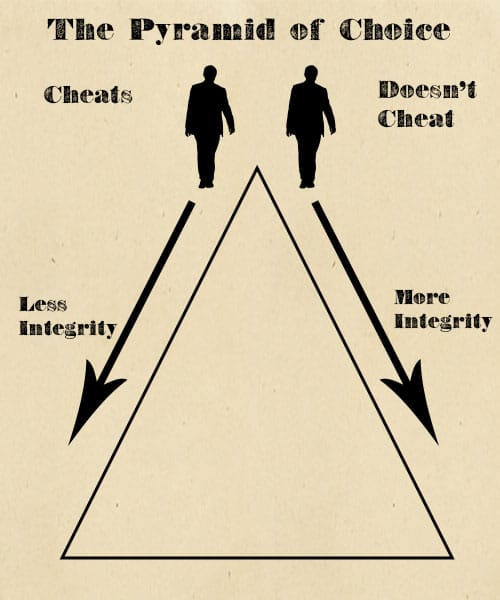

In Mistakes Were Made (But Not By Me), social psychologists Carol Tavris and Elliot Aronson illustrate the way in which a single decision can greatly alter the path we take and the strength of our integrity. They use the example of two college students who find themselves struggling on an exam that will determine whether or not they get into graduate school. They are “identical in terms of attitudes, abilities, and psychological health,” and are “reasonably honest and have the same middling attitude towards cheating.” Both students are presented with the chance to see another student’s answers and both struggle with the temptation. But one decides to cheat and the other does not. “Each gain something important, but at a cost; one gives up integrity for a good grade, the other gives up a good grade to preserve his integrity.”

What will each student think and tell himself as he reflects on his decision? As we explained in our series on personal responsibility, when you make a mistake or a choice that’s out of line with your values, a gap opens up between your actual behavior and your self-image as a good, honest, competent person. Because of this gap, you experience cognitive dissonance – a kind of mental anxiety or discomfort. Since humans don’t like this feeling of discomfort, our brains quickly work to bridge the divide between how we acted and our positive self-image by explaining away the behavior as really not so bad after all.

Thus the student who decided to cheat will soothe his conscience by telling himself things like, “I did know the answer, I just couldn’t think of it at the time,” or “Most of the other students cheated too,” or “The test wasn’t fair in the first place – the professor never said that subject was going to be covered.” He’ll find ways to frame his decision as no big deal.

The student who didn’t cheat, while he won’t experience the same kind of cognitive dissonance as his peer, will still wonder if he made the right choice, especially if he doesn’t get a good grade on the exam. Feeling uncertain about a decision can cause some dissonance too, so this student will also seek to buttress the confidence he feels in his choice by reflecting on the wrongness of cheating and how good it feels to have a clear conscience.

As each student reflects on and justifies his choice, his attitude about cheating and his self-perception will subtly change. The student who cheated will loosen his stance about when cheating is okay, and feel that there’s nothing wrong with being the kind of person who does it a little for a good reason; his ability to rationalize dishonest choices will go up and so will his fudge factor margin. The student who maintained his integrity will feel more strongly than before that cheating is never acceptable, and his ability to rationalize dishonesty will go down, along with his personal fudge factor margin. To further decrease the ambiguity and increase the certainty each student feels about his divergent decision, each will then make more choices in line with his new stance.

While the two students began in a very similar, morally ambiguous place, they have journeyed down what Tavris and Aronson call “The Pyramid of Choice” and arrived at opposite corners of the base. A single decision is all it took to put them on very different paths. As we can see, taking just one dishonest step can begin “a process of entrapment—action, justification, further action—that increases our intensity and commitment, and may end up taking us far from our original intentions and principles.”

Eh, What the Hell?

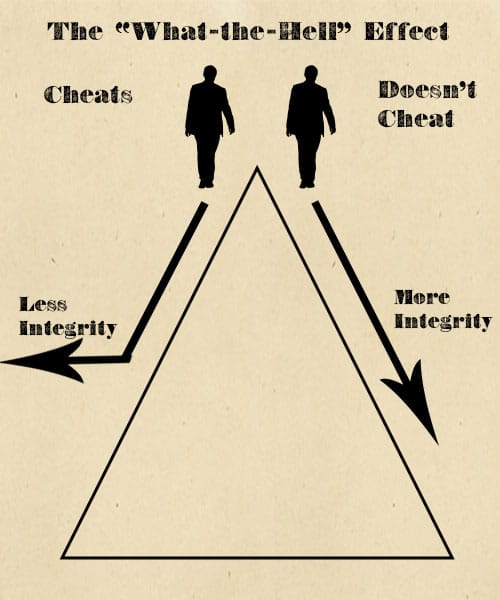

Rather than being two steadily widening lines, the paths that diverge from a single choice sometimes take a course that looks more like this:

What’s going on with that sharply diverging line on the left? It represents the moment where a person who makes a series of dishonest decisions reaches the “what-the-hell” point.

It’s easiest to understand the so-called what-the-hell effect if you’ve ever been on a strict diet. Let’s say you’re eating low carb and you’ve been doing really well with it for a couple of weeks. But now you’re out to dinner with a friend and the waitress has placed a basket of warm, fragrant bread right in front of you. You keep fighting off the temptation, and stick with your steak and broccoli…but damn those rolls look good. You finally decide to have just one, which leads to another and then another. When your friend decides to have dessert and invites you to order one too, instead of getting over your slight lapse and regaining your fortitude, you think, “Eh, what the hell, I’ve already ruined my diet anyway. I’ll start fresh tomorrow.” You enjoy your pie and then have a bowl of ice cream when you get home as well in order to make the most of your “ruined” day before starting over in the morning.

In his studies, Ariely found that the what-the-hell effect rears its head not only in decisions about diet, but in choices concerning our integrity as well.

In an experiment he conducted, participants were shown 200 squares, one after the other, on a computer screen. Their task was to pick which side of the square had more dots. If they chose the left side, they earned a half cent; if they chose the right side, they got 5 cents. The payout wasn’t contingent on the answer being correct, so participants were sometimes forced to choose between the answer they knew to be correct, which paid less, and the one with the higher reward, even though it was wrong.

What Ariely found is that participants who just cheated here and there at the start of the experiment would eventually reach an “honesty threshold,” the point where they would think: “What the hell, as long as I’m a cheater, I might as well get the most out of it.” They would then begin cheating at nearly every opportunity. The first decision to cheat led to another, until their fudge factor margin stretched from a sliver into a yawning chasm, and concerns about integrity fell right off the cliff.

Conclusion

Once you commit one dishonest act, your moral standards loosen, your self-perception as an honest person gets a little hazier, your ability to rationalize goes up, and your fudge factor margin increases. Where you draw the line between ethical and unethical, honest and dishonest, moves outward. From his research, Ariely has found that committing a dishonest act in one area of your life not only leads to more dishonesty in that one area, but ends up corrupting other areas of your life as well. “A single act of dishonesty,” he argues, “can change a person’s behavior from that point onward.”

What this means is that if you want to maintain your integrity, the best thing you can do is to never take that first dishonest step. No matter how small and inconsequential a choice may seem at the time, it may start you down a path that tarnishes your moral compass, leads you to commit more serious misdeeds, and causes you to compromise your fundamental principles.

Ariely argues that it’s crucial not only to keep ourselves from taking a first dishonest step, but also to curb small infractions in society. While he admits that it’s tempting to dismiss first-time mistakes as no big deal, his research suggests that we “should not excuse, overlook, or forgive small crimes, because doing so can make matters worse.” Instead, by cutting down “on the number of seemingly innocuous singular acts of dishonesty…society might become more honest and less corrupt over time.” This need not involve more regulations or zero-tolerance policies, which Ariely doesn’t think are effective, but rather instituting more subtle checks on personal and public integrity, some of which we’ll discuss in the next parts of this series.

Obviously, not everyone who makes one bad choice ends up morally depraved and utterly crooked. Many of us are able to make a single mistake, or even several, but then get back on track again. This is because various conditions not only make it more or less likely that we’ll make that first dishonest decision, but also increase or decrease our chances of turning ourselves around once we start down an unethical road.

One of those conditions – the distance we feel between our actions and their consequences – is where we will turn later this week.

Read the Series

Part II: Closing the Gap Between Our Actions and Their Consequences

Part III: How to Stop the Spread of Immorality

Part IV: The Power of Moral Reminders

_________________

Sources:

The Honest Truth About Dishonesty: How We Lie to Everyone–Especially Ourselves by Dan Ariely

Mistakes Were Made (But Not By Me) by Carol Tavris and Elliot Aronson